Reference News Network — April 7

According to a CNBC report on April 5, Google on Wednesday released details about one of its artificial intelligence (AI) supercomputers, saying it is faster and more energy-efficient than competing systems from NVIDIA. Energy-intensive machine learning models remain the hottest frontier in the tech industry.

While NVIDIA dominates the market for AI model training and inference with more than 90% market share, Google has been designing and deploying its own AI chips, called Tensor Processing Units (TPUs), since 2016.

Google is one of the leading players in AI, and its researchers have produced some of the field's most significant breakthroughs over the past decade. Yet critics argue the company has lagged in commercializing its technological innovations.

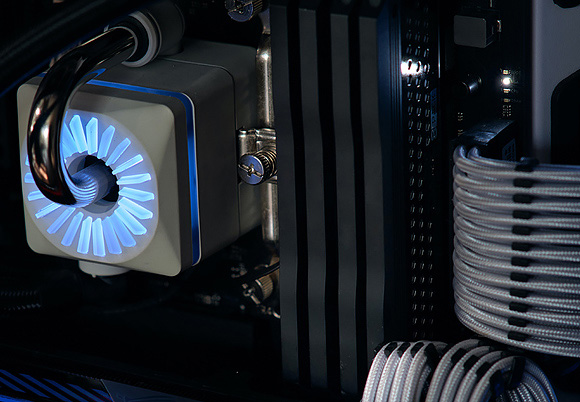

AI models and products—such as Google’s Bard or OpenAI’s ChatGPT, which runs on NVIDIA’s A100 chips—require massive computing clusters comprising tens of thousands of chips. These systems must operate continuously for weeks or even months to train a single large model.

On Tuesday, Google said it had built a system of more than 4,000 TPUs combined with custom components designed for running and training AI models. The system has been operational since 2020 and was used over a period of 50 days to train Google's PaLM model, a competitor to OpenAI's GPT.

Google researchers wrote that their TPU-based supercomputer, known as TPU v4, "is 1.2 to 1.7 times faster than the NVIDIA A100 and uses 1.3 to 1.9 times less power."

They added: “Its performance, scalability, and availability make the TPU v4 supercomputer a workhorse for large language models.”

However, Google said it did not benchmark its TPU against NVIDIA's current flagship AI chip, the H100, because the H100 reached the market after Google's chip and is built with more advanced manufacturing technology.

On Wednesday, MLPerf—the industry-wide benchmarking suite for AI hardware—released its latest results and rankings. NVIDIA CEO Jensen Huang stated that the new H100 chip significantly outperforms its predecessor.

In a blog post, Huang wrote: “Today’s MLPerf 3.0 results highlight that the H100 delivers four times the performance of the A100.” He added, “The next phase of generative AI demands new AI infrastructure capable of training large language models with high energy efficiency.”

The vast amount of energy that AI computing requires is expensive, prompting many companies to develop new chips, components such as optical interconnects, or software techniques that reduce power demands.

Cloud providers such as Google, Microsoft, and Amazon also have a large stake in AI's computing and energy demands. They rent out computing capacity by the hour and often give startups credits or free compute time to build relationships. (Notably, Google Cloud also sells compute time on NVIDIA chips.)